Quick docker summary

Why

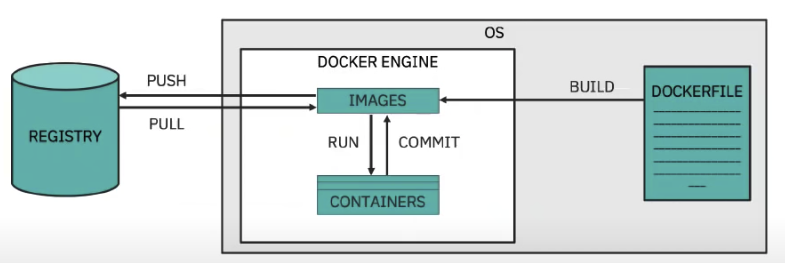

Containers consume less memory than VMs because they share the host kernel. Configuration, dependencies, and the start command are defined in a single file (the Dockerfile). Images are stored in a registry. An image is comparable to a VM image, and a container is a running instance of an image. The Docker engine manages the container life cycle and exposes an API used by the CLI.

Value propositions for containers

As a reminder, containers bring the following value to cloud-native applications:

- Docker ensures consistent environments from development to production: a container image carries all of its configuration and dependencies.

- Docker lets you commit changes to an image and version them, so rolling back to a previous version of the image is easy. The whole process can be tested in a few minutes.

- Docker is fast, allowing you to replicate containers quickly and achieve redundancy.

- Isolation: Docker makes sure each container has its own resources, isolated from other containers.

- Removing an app or container is easy and won’t leave any temporary or configuration files on your host OS.

- Applications running in containers are segregated from each other, which gives you control over traffic flow and management.

The container filesystem is represented as a list of read-only layers stacked on top of each other using a storage driver. The layers are generated as instructions are executed during the image build. When a container starts, a read-write layer is added on top.

The Docker daemon is configured through its configuration file (/etc/docker/daemon.json), and daemon startup options are usually controlled by the docker systemd unit.

Environment variables configure a single container. With linked containers (the legacy --link option), Docker automatically copies the environment variables of the source container into the target container.
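To look at the layer stack of an image that is already present locally, the following sketch wraps two standard docker CLI commands. The function name `show_layers` is only an illustrative helper and not part of Docker.

```shell
# show_layers IMAGE: print the build history and the layer digests of a local image.
# (show_layers is a hypothetical helper name used for illustration.)
show_layers() {
  image="$1"
  # One row per build step recorded in the image history.
  docker history "$image"
  # Content digests of the read-only layers that make up the image filesystem.
  docker image inspect --format '{{json .RootFS.Layers}}' "$image"
}
```

For example, `show_layers ubuntu:20.04` after the image has been pulled.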

Dockerfile

ENTRYPOINT specifies the default command to execute when the image runs in a container. CMD provides the default arguments for the ENTRYPOINT instruction. When no ENTRYPOINT is defined, CMD is itself the command that is executed.
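A minimal sketch of how the two instructions combine, assuming a throwaway image named `entrypoint-demo` (the image name and both helper functions are made up for this illustration):

```shell
# Write a throwaway Dockerfile (hypothetical demo image) into the given directory.
write_demo_dockerfile() {
  cat > "$1/Dockerfile" <<'EOF'
FROM ubuntu:20.04
# ENTRYPOINT fixes the executable and its leading arguments.
ENTRYPOINT ["echo", "Hello"]
# CMD supplies default trailing arguments that `docker run` can replace.
CMD ["world"]
EOF
}

# Build the image, then run it with the default CMD and with an overridden one.
run_demo() {
  docker build -t entrypoint-demo "$1"
  docker run --rm entrypoint-demo            # runs: echo Hello world
  docker run --rm entrypoint-demo container  # runs: echo Hello container
}
```

Typical use would be `dir=$(mktemp -d); write_demo_dockerfile "$dir"; run_demo "$dir"`.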

Example to build a custom Apache Web Server container image:

    FROM ubi7/ubi:7.7
    ENV PORT 8080
    RUN yum install -y httpd && yum clean all
    RUN sed -ri -e "/^Listen 80/c\Listen ${PORT}" /etc/httpd/conf/httpd.conf && \
        chown -R apache:apache /etc/httpd/logs/ && \
        chown -R apache:apache /run/httpd/
    USER apache
    EXPOSE ${PORT}
    COPY ./src/ /var/www/html/
    CMD ["httpd", "-D", "FOREGROUND"]
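Building and starting the Apache image could look like the sketch below. The image name `my-httpd`, the container name `web`, and the helper function are placeholders, and the commands assume they are run from the directory that holds the Dockerfile and the `src/` folder.

```shell
# Build the Apache image from the Dockerfile above and start it in the background.
# my-httpd and web are placeholder names chosen for this example.
build_and_run_httpd() {
  docker build -t my-httpd .
  # Publish container port 8080 (the PORT value in the Dockerfile) on the host.
  docker run --detach --name web -p 8080:8080 my-httpd
}
```

Once the container is up, `curl http://localhost:8080/` should return the content copied from `./src/`.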

See also Docker's best practices for writing Dockerfiles.

How to run without Docker Desktop

- Install a VM hypervisor: `brew install hyperkit`

- Install minikube: `brew install minikube`

- Install CLIs

- Use minikube: `minikube start --vm-driver=hyperkit`

- Get the docker env of minikube: `minikube docker-env`, or better, `eval $(minikube docker-env)`, so the environment variables are set for the docker CLI. Evaluating the docker-env is only valid for the current terminal.

- Use the docker CLI; it should contact the docker daemon running inside minikube.

- If the minikube pods are not able to contact internet add a names
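The docker-env step above can be wrapped in a small function, sketched here under the assumption that minikube is already started. The function name is made up for illustration, and it has to be called in the current shell (not in a subshell) for the exported variables to stick.

```shell
# Point the docker CLI of the current shell at the daemon inside minikube.
# (use_minikube_docker is a hypothetical helper name.)
use_minikube_docker() {
  # docker-env prints `export DOCKER_HOST=...` style lines; eval applies them here.
  eval "$(minikube docker-env)"
  echo "docker CLI now targets: ${DOCKER_HOST:-unset}"
}
```

After calling `use_minikube_docker`, a plain `docker ps` should list the containers running inside the minikube VM. `eval "$(minikube docker-env --unset)"` reverts the change.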

Some docker and docker compose tricks

Tricks

- Modify the PATH: `ENV PATH="/opt/ibm/db2/V11.5/bin:${PATH}"`

Run an Ubuntu image

This can be a good approach to demonstrate a Linux-based app.

    # start a long-running container with the current directory mounted under /home
    docker run --name my-linux --detach -v $(pwd):/home ubuntu:20.04 tail -f /dev/null
    # connect (volumes can only be mounted by docker run, not by docker exec)
    docker exec -ti my-linux bash
    # Update apt inventory and install Java, maven, git, curl, ...
    apt update
    apt install -y openjdk-11-jre-headless maven git curl vim
    # You have a linux based development environment

This project includes a Dockerfile-ubuntu to build a local image with the above tools.

Docker volume

To mount a host directory, configure its ownership and permissions so that the process running in the container can access it.
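One way to satisfy this, sketched below, is to run the container process with the UID and GID of the host user who owns the directory. The helper name, the `/data` mount point, and the file name are assumptions made for the example. The directory should be passed as an absolute path, otherwise `-v` treats the value as a named volume.

```shell
# Create a host directory and run a container that writes to it as the current host user.
# (run_with_host_dir, /data and hello.txt are placeholders for illustration.)
run_with_host_dir() {
  host_dir="$1"
  mkdir -p "$host_dir"
  # Give owner and group read, write and traverse access to the directory.
  chmod 775 "$host_dir"
  # --user makes the container process use the host user's UID:GID, so files
  # created under /data stay owned by that user on the host.
  docker run --rm --user "$(id -u):$(id -g)" \
    -v "$host_dir":/data ubuntu:20.04 touch /data/hello.txt
}
```

For example: `run_with_host_dir "$HOME/shared-data"`.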

Reclaim disk space

See the what-to-do-when-docker-runs-out-of-space post.
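Independently of that post, the docker CLI ships commands for inspecting and freeing disk space. The sketch below groups them in a hypothetical helper; treat it as a starting point, since pruning deletes data.

```shell
# Report what is using space, then remove unused data.
# (reclaim_docker_space is a hypothetical helper name.)
reclaim_docker_space() {
  # Summary of space used by images, containers, local volumes and build cache.
  docker system df
  # Remove stopped containers, unused networks, dangling images and build cache.
  docker system prune --force
  # Remove unused local volumes; their data is lost.
  docker volume prune --force
}
```

Dropping `--force` makes each prune command ask for confirmation first.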

Docker network

    docker network list
    # create a network
    docker network create kafkanet
    # Assess which network a container is connected to
    docker inspect 71582654b2f4 -f "{{json .NetworkSettings.Networks }}"
    > "bridge":{"IPAMConfig":null,"Links":null,"Aliases":null,"NetworkID":"7db..."
    # disconnect a container from its network
    docker network disconnect bridge 71582654b2f4
    # Connect an existing container to a network
    docker network connect docker_default containernameorid

Inside the container, the host is reachable through the DNS name host.docker.internal. The other solution is to use --network="host" in the docker run command; 127.0.0.1 inside the container then points to the docker host.
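Both approaches can be sketched as follows. The helper names are made up, and the `--add-host` mapping with the special value `host-gateway` is an assumption about the installed engine: it is typically needed on Linux, where host.docker.internal may not be defined by default.

```shell
# Resolve host.docker.internal from inside a container.
# Assumes the engine supports the special host-gateway value for --add-host.
resolve_host_from_container() {
  docker run --rm --add-host=host.docker.internal:host-gateway \
    ubuntu:20.04 getent hosts host.docker.internal
}

# Share the host network stack: 127.0.0.1 in the container is the docker host.
# Arguments are the image name followed by an optional command.
run_on_host_network() {
  docker run --rm --network host "$@"
}
```

For example, `run_on_host_network ubuntu:20.04 hostname` prints the name of the host rather than a container ID.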

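Start a docker bypassing entry point or cmd

Use the --entrypoint option of docker run to bypass the image entry point, or pass a command after the image name to replace the default CMD.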
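The two variants can be sketched with the standard `docker run` options. The helper names are illustrative, and the image is assumed to contain `/bin/bash`.

```shell
# Open a shell instead of the image's ENTRYPOINT (image name passed by the caller).
shell_bypassing_entrypoint() {
  docker run --rm -ti --entrypoint /bin/bash "$1"
}

# Replace only the default CMD by giving a command after the image name.
shell_replacing_cmd() {
  docker run --rm -ti "$1" /bin/bash
}
```

The second form only helps when the image defines no ENTRYPOINT, because otherwise the command is passed to the entry point as arguments.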

Docker build image with tests and env variables

Inject the environment variables with --build-arg:

    docker build --network host \
      --build-arg KAFKA_BROKERS=${KAFKA_BROKERS} \
      --build-arg KAFKA_APIKEY=${KAFKA_APIKEY} \
      --build-arg POSTGRESQL_URL=${POSTGRESQL_URL} \
      --build-arg POSTGRESQL_USER=${POSTGRESQL_USER} \
      --build-arg POSTGRESQL_PWD=${POSTGRESQL_PWD} \
      --build-arg JKS_LOCATION=${JKS_LOCATION} \
      --build-arg TRUSTSTORE_PWD=${TRUSTSTORE_PWD} \
      --build-arg POSTGRESQL_CA_PEM="${POSTGRESQL_CA_PEM}" -t ibmcase/$kname .
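On the Dockerfile side, each value passed with `--build-arg` has to be declared with an `ARG` instruction. The sketch below writes a hypothetical Dockerfile that consumes two of the arguments; the base image and the `run-tests.sh` script are placeholders and do not reflect the project's real build.

```shell
# Write a Dockerfile that receives two of the build arguments shown above.
# (Base image and run-tests.sh are hypothetical, for illustration only.)
write_build_arg_dockerfile() {
  cat > "$1/Dockerfile" <<'EOF'
FROM ubuntu:20.04
# Each --build-arg must be declared with ARG before it can be used.
ARG KAFKA_BROKERS
ARG POSTGRESQL_URL
COPY . /app
WORKDIR /app
# ARG values are visible as environment variables to RUN steps during the build.
RUN ./run-tests.sh
EOF
}
```

Build arguments can show up in the image history, so passwords passed this way should be treated as exposed to anyone who can pull the image.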

Docker compose

Docker compose helps to orchestrate different docker containers and isolates them within a network. Examples of interesting docker-compose files:

- Kafka Strimzi

- Kafka Confluent

A useful option when starting containers is to have Compose build the image when it does not exist locally.
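A minimal sketch of such a setup declares both `image` and `build` for a service. The service name, the image tag `myorg/myapp`, and the helper functions are placeholders; the `docker compose` plugin syntax is assumed, and older installations use the `docker-compose` binary instead.

```shell
# Write a compose file whose service declares both an image tag and a build context.
# (myapp and myorg/myapp are placeholder names.)
write_compose_file() {
  cat > "$1/docker-compose.yml" <<'EOF'
services:
  myapp:
    # Tag given to the image; an existing image with this tag can be reused.
    image: myorg/myapp
    # Build context used to create the image when it is missing.
    build:
      context: .
EOF
}

# Start the services in the background from the directory holding the compose file.
start_stack() {
  (cd "$1" && docker compose up -d)
}
```

Adding `--build` to the `up` command forces a rebuild even when the image already exists.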